The Trillion-Dollar Engine: Mapping the Compute, Cash, and Contracts Behind OpenAI

Introduction

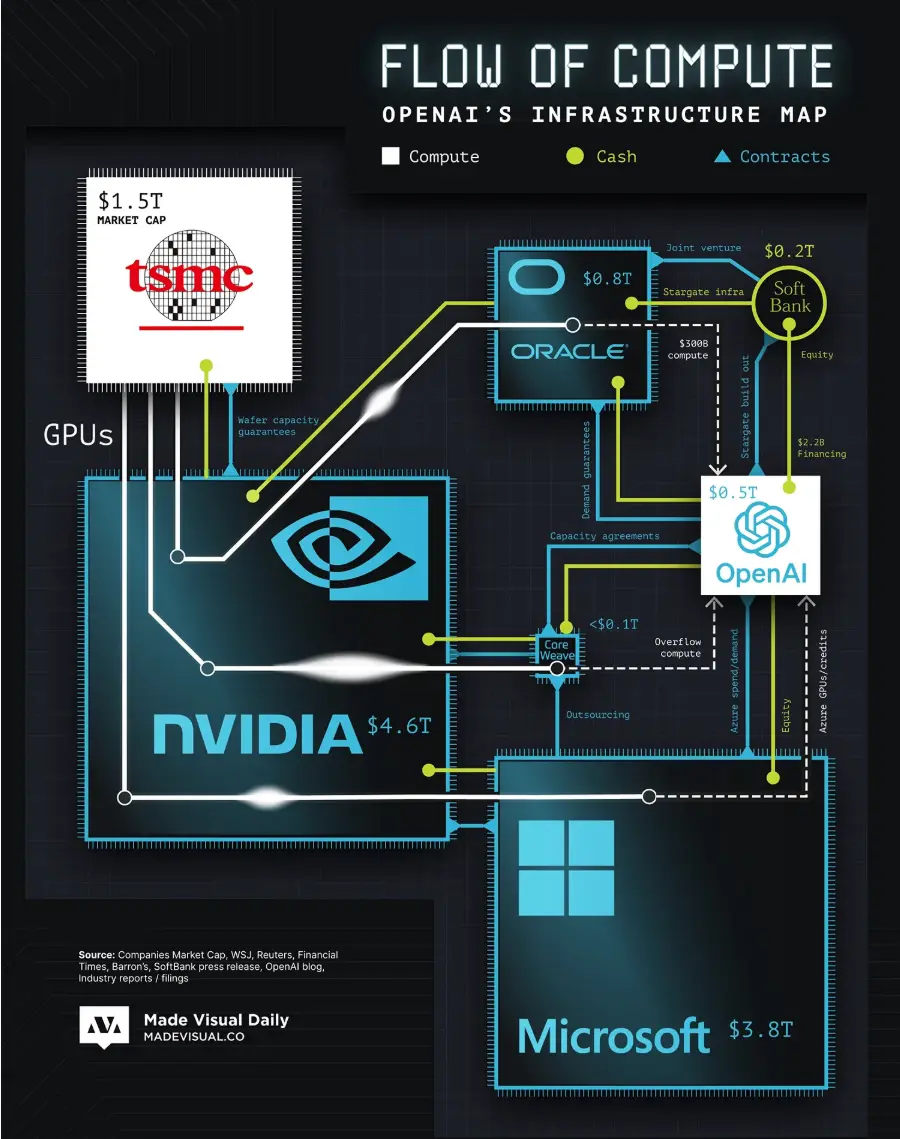

OpenAI's products, such as ChatGPT, can seem to work like seamless digital magic. Beneath the user-friendly interface, however, lies a sprawling infrastructure built on compute power, massive capital investments, and multibillion-dollar contracts. A recent visualization from Visual Capitalist, based on work by Made Visual Daily, maps this high-stakes ecosystem, revealing a tightly interwoven and potentially fragile network of dependencies that powers the current generative AI boom 1.

The Titans of the AI Ecosystem

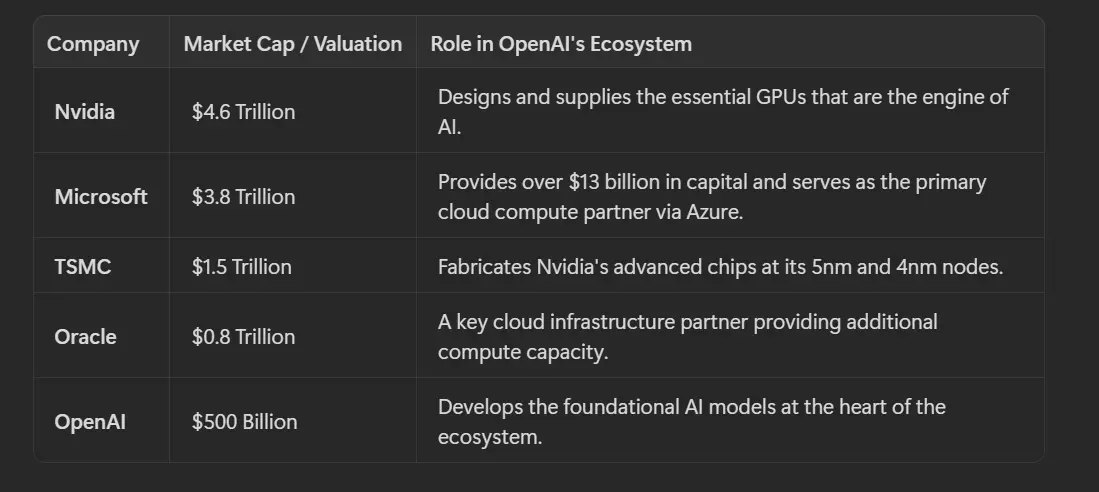

The development and deployment of large-scale AI are dominated by a handful of trillion-dollar entities. OpenAI, with its recent valuation of around $500 billion, sits at the center of this ecosystem, but it is critically dependent on a network of corporate giants for its operations. These relationships involve a circular flow of capital and resources, creating a unique and highly concentrated industrial structure.

The Global GPU Supply Chain: A Fragile Lifeline

The engine behind OpenAI's success is the Nvidia GPU, particularly the highly sought-after H100 chip. However, the journey of a single GPU from design to deployment is a global and fragile process:

1. Design: Nvidia designs the chips in-house in the United States.

2. Fabrication: The designs are sent to Taiwan, where TSMC fabricates the complex silicon wafers.

3. Assembly: Firms like Quanta and Foxconn package and test the finished chips.

4. Deployment: Server manufacturers such as Supermicro integrate the GPUs into AI-optimized server racks.

5. Delivery: Finally, these server clusters are shipped to cloud providers like Microsoft Azure and the rapidly growing CoreWeave, where OpenAI and others access them.

This multi-stage supply chain is vulnerable to geopolitical tensions, economic shifts, and logistical disruptions. The intense demand has transformed high-end GPUs into a new strategic commodity, with some firms even using their GPU allocations as collateral for financing 1.
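To make the fragility concrete, the five stages can be treated as a strictly sequential pipeline in which a disruption at any stage stalls everything downstream of it. The sketch below is a deliberate simplification: the stage and company names come from the list above, while the `SUPPLY_CHAIN` structure and the `blocked_stages` helper are hypothetical names introduced for illustration, and the model assumes no inventory buffers or alternative suppliers.

```python
# Stages and example firms as listed in the article, in order.
# Assumption: the chain is strictly linear, with no buffers or substitutes.
SUPPLY_CHAIN = [
    ("Design", "Nvidia"),
    ("Fabrication", "TSMC"),
    ("Assembly", "Quanta, Foxconn"),
    ("Deployment", "Supermicro"),
    ("Delivery", "Microsoft Azure, CoreWeave"),
]


def blocked_stages(disrupted_stage: str) -> list[str]:
    """Return the disrupted stage plus every stage downstream of it."""
    names = [stage for stage, _ in SUPPLY_CHAIN]
    if disrupted_stage not in names:
        raise ValueError(f"unknown stage: {disrupted_stage}")
    return names[names.index(disrupted_stage):]


if __name__ == "__main__":
    # A disruption at fabrication stalls every later stage as well.
    print(blocked_stages("Fabrication"))
```

Under this simplified model, the earlier in the chain a disruption occurs, the more stages it stalls, which is one way to read the concern about concentration at the design and fabrication steps.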

The Closed-Loop System and AI Bubble Risk

What makes the current AI landscape unique is the creation of a "closed-loop" system of capital and resources. Microsoft has invested over $13 billion into OpenAI, which in turn spends a significant portion of that capital on compute services from Microsoft's Azure cloud—services that run on Nvidia GPUs procured by Microsoft. This creates a circular flow where investment dollars are funneled back to the initial investors and their key suppliers.

This interdependence extends further. Microsoft is also a primary customer of CoreWeave, another major cloud provider that purchases massive volumes of Nvidia hardware. This creates a tightly coupled ecosystem where the same dollars and chips circulate among a small group of dominant companies.
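One way to picture the loop is as a small directed graph of payments, where a "closed loop" is simply a cycle. The sketch below encodes only the relationships named in this section; the `FLOWS` list, its labels, and the `find_cycles` helper are hypothetical names introduced for illustration, and no dollar amounts are modeled.

```python
# Each entry is (payer, payee, purpose), taken from the relationships
# described in the text. The labels are illustrative, not contractual terms.
FLOWS = [
    ("Microsoft", "OpenAI", "investment"),
    ("OpenAI", "Microsoft", "Azure compute spend"),
    ("Microsoft", "Nvidia", "GPU procurement"),
    ("Microsoft", "CoreWeave", "cloud services"),
    ("CoreWeave", "Nvidia", "GPU procurement"),
]


def find_cycles(flows):
    """Return each simple cycle once, starting from its alphabetically first node."""
    graph = {}
    for payer, payee, _ in flows:
        graph.setdefault(payer, []).append(payee)

    cycles = []

    def dfs(start, node, path):
        for nxt in graph.get(node, []):
            if nxt == start:
                # Back at the starting company: the path closes into a loop.
                cycles.append(path + [start])
            elif nxt not in path and nxt > start:
                # Only walk through nodes that sort after the start,
                # so the same cycle is not reported from each of its members.
                dfs(start, nxt, path + [nxt])

    for start in sorted(graph):
        dfs(start, start, [start])
    return cycles


if __name__ == "__main__":
    for cycle in find_cycles(FLOWS):
        print(" -> ".join(cycle))
```

With only the flows stated here, the single strict cycle is the one between Microsoft and OpenAI; the CoreWeave and Nvidia links are not cycles in this graph but shared dependencies that route spending to the same supplier.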

Analysts have raised concerns that this closed-loop structure could pose a systemic risk, potentially inflating an AI bubble. The deep financial and operational entanglement means that a sudden change in demand, a disruption in the supply chain, or a shift in funding conditions could send shockwaves throughout the entire sector, magnifying the impact of any single point of failure 1.

Conclusion

The infrastructure powering OpenAI is a marvel of modern engineering and finance, but it is also a testament to the concentrated and interdependent nature of the AI industry. The reliance on a handful of companies for critical components—from chip fabrication to cloud compute—creates both immense efficiencies and significant risks. As the AI revolution continues to accelerate, understanding this intricate web of compute, cash, and contracts is essential to navigating its opportunities and potential pitfalls.

Siniša Dagary, www.sinisadagary.com